記事一覧

- Anthropic(2024) “Introducing the next generation of Claude” 2024/3/4付けプレスリリース

- OpenAI(2024) “Hello GPT-4o”,2024/5/13

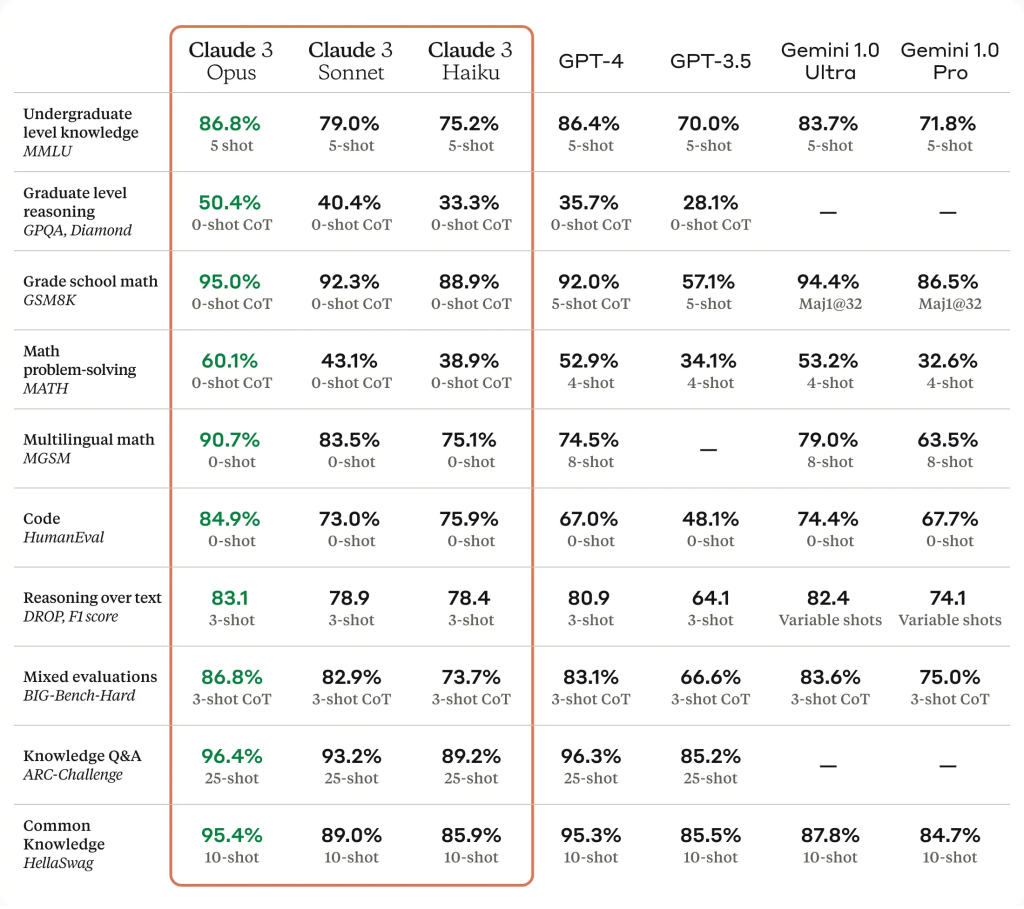

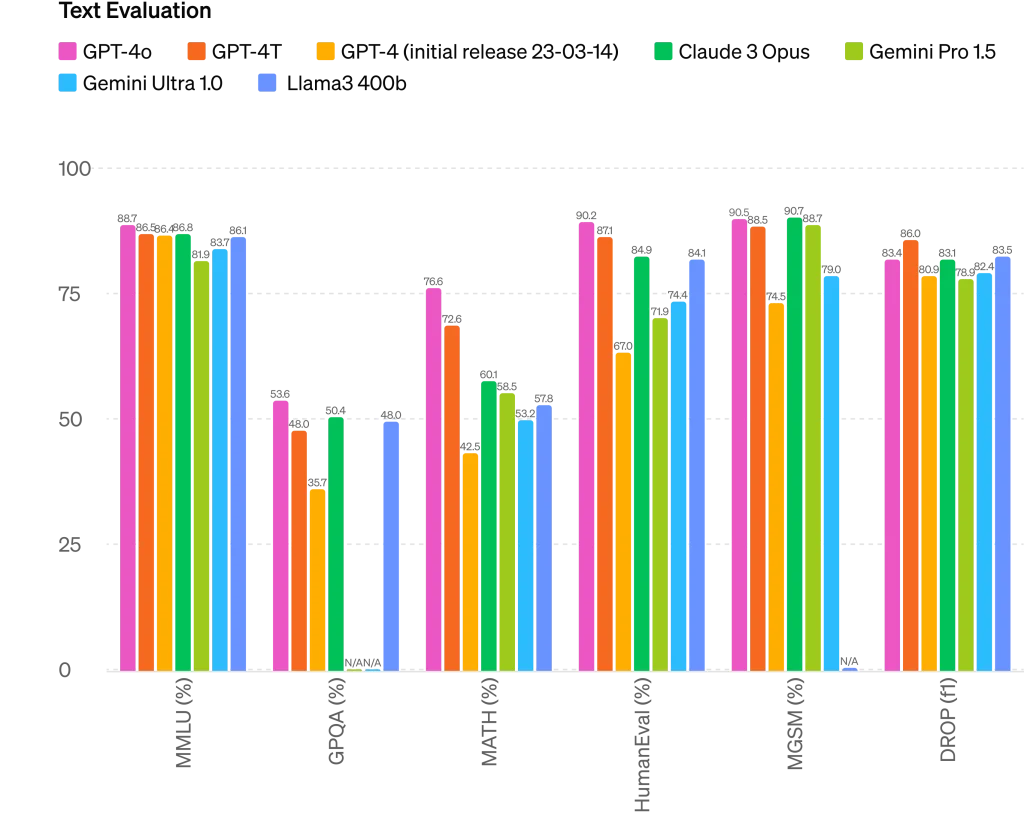

https://openai.com/index/hello-gpt-4o/本プレスリリースによると、ChatGPT4oの性能は、下記性能比較図に示されているように、全項目でClaude 3 Opusとほぼ同じかそれを上回る性能である。

- Perplexity AI(利用言語モデル:Claude3 Opus)によるGPT-4oとClaude3 Opusの性能比較の回答

ChatGPT-4oとClaude 3 Opusの性能比較について、提供された情報源から以下のような洞察が得られました。ベンチマークスコア:

– MMLU (大学レベルの知識テスト): Claude 3 Opusは88.2% (5-shot CoT)、GPT-4oは87.2% (5-shot no-CoT)でほぼ同等の高スコアを達成[1][14]

– MMMU (多分野・マルチモーダルベンチマーク): Claude 3 Opusは59.4%、GPT-4oはこれを上回る[14][18]

– HumanEval (コーディング): Claude 3 Opusは過去最高の68.4%を達成[17]価格:

– Claude 3 Opusはインプットトークン100万あたり$15、アウトプットは$75

– GPT-4oはClaude 3 Opusの半額でインプット$7.5、アウトプット$37.5[9][18]パフォーマンス:

– Claude 3 Opusのスループットは28.7トークン/秒

– GPT-4oはClaude 3 Opusの2倍の速度[9][16]コンテキストウィンドウ:

– Claude 3 Opusは200kトークン

– GPT-4oも同様に200kトークン[11][16]主な特徴:

– Claude 3 Opusは高度な推論、コーディング、多言語対応に優れる

– GPT-4oはオーディオ、ビジョン、マルチモーダル統合が強み[11][16]総合的に見ると、Claude 3 OpusとGPT-4oはMMLUなどの一般的なベンチマークでほぼ同等の高い性能を示しています。一方でGPT-4oはより安価で高速であり、オーディオ・ビジョン統合に優れています。用途に応じてコストパフォーマンスと必要な機能を考慮して選択するのが良いでしょう。ただし、これらのモデルは日々進化しているため、最新の情報を追跡し続けることが重要です。

Citations:

[1] https://community.openai.com/t/gpt4-comparison-to-anthropic-opus-on-benchmarks/726147

[2] https://www.geeky-gadgets.com/claude-3-opus-vs-chatgpt-4-2024/

[3] https://www.chatgptguide.ai/2024/03/31/chatgpt-4-vs-claude-3-opus-in-depth-analysis-and-practical-applications/

[4] https://www.youtube.com/watch?v=zb4R82ghgb8

[5] https://next.redhat.com/2024/05/16/evaluating-the-performance-of-large-language-models/

[6] https://thegradient.pub/understanding-evaluation-metrics-for-language-models/

[7] https://ttms.com/chatgpt-4o-as-a-content-creation-tool-how-does-it-differ-from-version-4-0/

[8] https://arxiv.org/abs/2310.13800

[9] https://artificialanalysis.ai/models/claude-3-opus

[10] https://research.aimultiple.com/large-language-model-evaluation/

[11] https://www.anthropic.com/news/claude-3-family

[12] https://www.reddit.com/r/ChatGPTPro/comments/1bo5i5o/chatgpt_vs_claude_opus_for_coding/

[13] https://www.linkedin.com/pulse/evaluating-large-language-models-llms-standard-set-metrics-biswas-ecjlc

[14] https://community.openai.com/t/gpt-4-turbo-and-gpt-4-o-benchmarks-released-they-do-well-compared-to-the-marketplace/744528

[15] https://www.youtube.com/watch?v=pGAm1ByySso

[16] https://openai.com/index/hello-gpt-4o/

[17] https://www.vellum.ai/blog/claude-3-opus-vs-gpt4-task-specific-analysis

[18] https://context.ai/compare/claude-3-opus/claude-instant-v1

[19] https://learn.microsoft.com/en-us/ai/playbook/technology-guidance/generative-ai/working-with-llms/eval-metrics

[20] https://elblog.pl/2024/05/13/openai-sets-new-benchmark-with-chatgpt4o-outshining-traditional-voice-assistants/- ChatGPT4OによるGPT-4oとClaude3 Opusの性能比較の回答

https://chatgpt.com/share/e935b89e-ddae-4891-b332-fbeafc53e5e1Here is a comparative analysis of the performance of GPT-4o and Claude 3 Opus based on several benchmarks and tasks:Performance Benchmarks

1. Mathematical Problem Solving:

– GPT-4o: Scores 52.9% in MATH benchmarks, demonstrating strong performance in mathematical reasoning and problem-solving.

– Claude 3 Opus: Scores 60.1% in MATH benchmarks, slightly outperforming GPT-4o in this category【9†source】【10†source】.2. Summarization Tasks:

– GPT-4o: Provides detailed and accurate summaries, capturing the essence of the content comprehensively.

– Claude 3 Opus: Tends to provide shorter, more general summaries, which might miss some detailed aspects【7†source】【8†source】.3. Text Generation:

– GPT-4o: Excels in generating structured and formatted text, making it easier to read and follow.

– Claude 3 Opus: Also performs well but may not be as structured as GPT-4o【8†source】.4. General Knowledge and Reasoning:

– GPT-4o: Handles general knowledge questions and complex reasoning tasks effectively, often providing more nuanced and detailed responses.

– Claude 3 Opus: Performs competently but may struggle with certain reasoning tasks compared to GPT-4o【8†source】.5. Follow User Instructions:

– Claude 3 Opus: Particularly strong in following complex user instructions and generating appropriate responses based on those instructions.

– GPT-4o: Also follows instructions well but might not match the precision of Claude 3 Opus in some complex scenarios【9†source】【10†source】.Cost and Accessibility

– GPT-4o: Generally more affordable, with costs around $10 per million input tokens and $30 per million output tokens.

– Claude 3 Opus: More expensive, costing $15 per million input tokens and $75 per million output tokens【10†source】.Conclusion

While both GPT-4o and Claude 3 Opus have their strengths, GPT-4o tends to outperform Claude 3 Opus in tasks requiring detailed summaries and complex reasoning. However, Claude 3 Opus excels in specific areas such as following complex instructions and some mathematical tasks. The choice between the two may depend on specific use cases and budget considerations.

For more detailed performance metrics and task-specific comparisons, you can refer to sources such as Vellum.ai, FavTutor, and Beebom【6†source】【7†source】【9†source】【10†source】.

- Perplexity AI(利用言語モデル:Claude3 Opus)によるGPT-4oとClaude3 Opusの性能比較の回答

https://www.anthropic.com/news/claude-3-family

Claude 3 Haiku, Claude 3 Sonnet, and Claude 3 Opusという3種類のLLMの紹介。下記引用図のように、3種類の中で最も高性能なClaude3 Opusは、全項目において、競合製品のGPT-4やGemini1.0 Ultraと同じか、それらを高く上回る性能を示している。